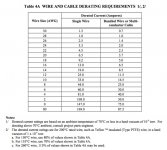

I read it as talking about ampacity, but it misses the fact that the longer the run, the larger the wire (lower AWG number) you need as well, due to voltage drop. But as the chart states, take AWG 6 with 90 °C rated insulation: I could have a max of 75 amps. And this is with multiple conductors bundled together. The reason for this is physics: heat. How much heat can the cable take before total meltdown? That is why people on here end up with burnt conductors. When full contact with the wire is not made, all of the current goes through a smaller portion of the wire, which creates too much heat and scorches everything.
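A rough sketch of the two effects described above: voltage drop growing with run length, and I²R heating rising when only part of the conductor carries the current. It assumes annealed copper resistivity at 20 °C (1.724e-8 Ω·m) and the standard AWG diameter formula, treats the wire as a solid conductor, and ignores the temperature rise of the wire itself, so real drops and heating will be somewhat higher. `contact_fraction` is a hypothetical parameter for the share of the cross-section actually carrying current.

```python
import math

# Assumption: annealed copper resistivity at 20 °C, in ohm-metres.
# Warmer wire has higher resistance, so these results are optimistic.
COPPER_RESISTIVITY_OHM_M = 1.724e-8


def awg_area_m2(awg: int) -> float:
    """Cross-sectional area of a solid AWG conductor in square metres."""
    diameter_mm = 0.127 * 92 ** ((36 - awg) / 39)  # standard AWG diameter formula
    return math.pi / 4 * (diameter_mm / 1000) ** 2


def resistance_per_m(awg: int) -> float:
    """DC resistance of one copper conductor in ohms per metre."""
    return COPPER_RESISTIVITY_OHM_M / awg_area_m2(awg)


def voltage_drop(awg: int, amps: float, one_way_m: float) -> float:
    """Round-trip voltage drop: current goes out and back, so 2x the run length."""
    return amps * resistance_per_m(awg) * 2 * one_way_m


def heat_per_m(awg: int, amps: float, contact_fraction: float = 1.0) -> float:
    """I^2 R heating in watts per metre when only `contact_fraction` of the
    cross-section carries the current (hypothetical poor-contact model)."""
    effective_area = awg_area_m2(awg) * contact_fraction
    return amps ** 2 * COPPER_RESISTIVITY_OHM_M / effective_area


if __name__ == "__main__":
    for run_m in (10, 30, 60):
        print(f"AWG 6, 75 A, {run_m} m run: {voltage_drop(6, 75, run_m):.2f} V drop")
    print(f"Full contact: {heat_per_m(6, 75):.1f} W/m")
    print(f"Half contact: {heat_per_m(6, 75, 0.5):.1f} W/m")
```

Under these assumptions AWG 6 copper comes out around 1.3 Ω per km, so a 30 m run at 75 A loses a bit under 6 V round trip, and halving the effective cross-section doubles the heat per metre at that spot.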

Dan, there is a 2011 version of NFPA 70, and the chart has been updated.

So if I am completely out of the ballpark, someone step in, please.

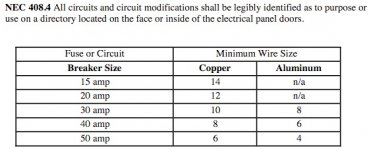

Well, my memory is not as sharp as it was. 4 gauge is what would be required if it were aluminum wire. Sorry about my memory. Here is the 2014 NEC guide covering it:
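A minimal check of why aluminum has to go up two sizes, using only DC resistance. The resistivities are assumed typical values at 20 °C (copper 1.724e-8 Ω·m, aluminum 2.82e-8 Ω·m), not numbers from the NEC guide, and the ampacity tables also account for insulation and installation conditions that this sketch ignores.

```python
import math

# Assumed handbook resistivities at 20 °C, in ohm-metres (not from the NEC).
RESISTIVITY_OHM_M = {"copper": 1.724e-8, "aluminum": 2.82e-8}


def awg_area_m2(awg: int) -> float:
    """Cross-sectional area of a solid AWG conductor in square metres."""
    diameter_mm = 0.127 * 92 ** ((36 - awg) / 39)  # standard AWG diameter formula
    return math.pi / 4 * (diameter_mm / 1000) ** 2


def ohms_per_km(metal: str, awg: int) -> float:
    """DC resistance of one conductor in ohms per kilometre."""
    return RESISTIVITY_OHM_M[metal] / awg_area_m2(awg) * 1000


cu6 = ohms_per_km("copper", 6)
al4 = ohms_per_km("aluminum", 4)
print(f"6 AWG copper:   {cu6:.3f} ohm/km")
print(f"4 AWG aluminum: {al4:.3f} ohm/km")
print(f"6 AWG aluminum: {ohms_per_km('aluminum', 6):.3f} ohm/km")
```

6 AWG copper and 4 AWG aluminum land within a few percent of each other (about 1.3 Ω per km), so at the same current they heat about the same, while 6 AWG aluminum is roughly 60% more resistive.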

The type of insulation used establishes the temperature rating of the wire.

Update:

After a while I remembered that it was the customer's derating specification that drove up the wire size required. The 6 gauge wire is derated to 60% of its rated current-carrying capacity for a single wire and 30% for bundled wire. Depending on the type of wire, the slash notes increase or decrease this. Also note that Mil-spec wire is good for 135 °C to 260 °C, and you would not want to pay that cost for home use. This is from the old NASA spec, but the new ISO standards follow the old Mil and NASA standards. This is starting to make me remember what I had to do for everything when I was working. You don't have to wonder why government purchases cost so much. This is just the tip of the iceberg put on contractors and part suppliers. On a case-by-case basis, 4 gauge 260 °C rated wire, rated at 44 A max bundled, could be approved for 50 amps. It depended on the maximum ambient temperature and the length of the cable.
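The derating arithmetic from the update, sketched in Python. The 60% and 30% factors are the ones quoted in the post; starting from the 75 A (90 °C) figure quoted from the chart earlier in the thread is an assumption, since the customer spec and its slash notes may use a different base rating.

```python
# Derating factors quoted in the post for the customer spec on 6 gauge wire.
DERATING = {"single": 0.60, "bundled": 0.30}


def derated_amps(rated_amps: float, installation: str) -> float:
    """Allowed current after applying the spec's derating factor."""
    return rated_amps * DERATING[installation]


# Assumption: 75 A base rating (AWG 6, 90 °C insulation) from the earlier chart.
for installation in DERATING:
    print(f"{installation}: {derated_amps(75, installation):.1f} A")
```

With those inputs a single 6 gauge wire would be held to 45 A and a bundled one to 22.5 A, well under the 75 A table value, which is how a derating spec ends up calling for a heavier gauge.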